- It is better to have winutils.exe in place to avoid unnecessary problems. To avoid the error, download the winutils.exe binary and add it to the classpath. Error: java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.

- HADOOP-11003 org.apache.hadoop.util.Shell should not take a dependency on binaries being deployed when used as a library (Resolved)

- HADOOP-10775 Shell operations to fail with meaningful errors on windows if winutils.exe not found

- Status: Closed

- Resolution: Not A Problem

- Fix Version/s: None


    C:\Users\WEI>pyspark
    Python 3.5.6 |Anaconda custom (64-bit)| (default, Aug 26 2018, 16:05:27) [MSC v.1900 64 bit (AMD64)] on win32
    Type 'help', 'copyright', 'credits' or 'license' for more information.
    2018-09-14 21:12:39 ERROR Shell:397 - Failed to locate the winutils binary in the hadoop binary path
    java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.
        at org.apache.hadoop.util.Shell.getQualifiedBinPath(Shell.java:379)
        at org.apache.hadoop.util.Shell.getWinUtilsPath(Shell.java:394)
        at org.apache.hadoop.util.Shell.<clinit>(Shell.java:387)
        at org.apache.hadoop.util.StringUtils.<clinit>(StringUtils.java:80)
        at org.apache.hadoop.security.SecurityUtil.getAuthenticationMethod(SecurityUtil.java:611)
        at org.apache.hadoop.security.UserGroupInformation.initialize(UserGroupInformation.java:273)
        at org.apache.hadoop.security.UserGroupInformation.ensureInitialized(UserGroupInformation.java:261)
        at org.apache.hadoop.security.UserGroupInformation.loginUserFromSubject(UserGroupInformation.java:791)
        at org.apache.hadoop.security.UserGroupInformation.getLoginUser(UserGroupInformation.java:761)
        at org.apache.hadoop.security.UserGroupInformation.getCurrentUser(UserGroupInformation.java:634)
        at org.apache.spark.util.Utils$$anonfun$getCurrentUserName$1.apply(Utils.scala:2467)
        at org.apache.spark.util.Utils$$anonfun$getCurrentUserName$1.apply(Utils.scala:2467)
        at scala.Option.getOrElse(Option.scala:121)
        at org.apache.spark.util.Utils$.getCurrentUserName(Utils.scala:2467)
        at org.apache.spark.SecurityManager.<init>(SecurityManager.scala:220)
        at org.apache.spark.deploy.SparkSubmit$.secMgr$lzycompute$1(SparkSubmit.scala:408)
        at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$secMgr$1(SparkSubmit.scala:408)
        at org.apache.spark.deploy.SparkSubmit$$anonfun$doPrepareSubmitEnvironment$7.apply(SparkSubmit.scala:416)
        at org.apache.spark.deploy.SparkSubmit$$anonfun$doPrepareSubmitEnvironment$7.apply(SparkSubmit.scala:416)
        at scala.Option.map(Option.scala:146)
        at org.apache.spark.deploy.SparkSubmit$.doPrepareSubmitEnvironment(SparkSubmit.scala:415)
        at org.apache.spark.deploy.SparkSubmit$.prepareSubmitEnvironment(SparkSubmit.scala:250)
        at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:171)
        at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:137)
        at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
    2018-09-14 21:12:39 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    Setting default log level to 'WARN'.
    To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
    Welcome to
          ____              __
         / __/__  ___ _____/ /__
        _\ \/ _ \/ _ `/ __/  '_/
       /__ / .__/\_,_/_/ /_/\_\   version 2.3.1
          /_/

    Using Python version 3.5.6 (default, Aug 26 2018 16:05:27)
    SparkSession available as 'spark'.
    >>>

- Assignee: Unassigned

- Reporter: WEI PENG


Build your own Hadoop distribution in order to make it run on Windows 7 with this in-depth tutorial.

Introduction

I searched on Google and found that Hadoop has supported Windows natively since version 2.2, but official Apache Hadoop releases do not include native Windows binaries, so we need to build them ourselves. This tutorial is a step-by-step guide to building a Hadoop binary distribution from the Hadoop source code on Windows. It also covers setting up Java, Maven, and the other required components. Apache Hadoop is an open source Java project, mainly used for distributed storage and large-scale data processing. It is designed to scale horizontally and to support distributed processing across multiple machines. You can find more about Hadoop at http://hadoop.apache.org/.


Author's GitHub

I have created a number of Spark-Scala utilities at https://github.com/gopal-tiwari, which might be helpful in other cases.

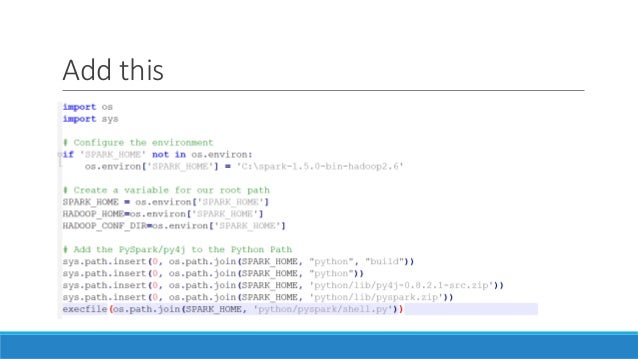

Solution for Spark Errors

Many of you may have tried running Spark on Windows OS and faced an error in the console (shown below). This is because your Hadoop distribution does not contain native binaries for Windows OS, as they are not included in the official Hadoop Distribution. So you need to build Hadoop from its source code on your Windows OS.
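The `null` in the error message is the missing Hadoop home directory: Hadoop's Shell class reads the `hadoop.home.dir` Java system property, falls back to the `HADOOP_HOME` environment variable, and then appends `bin\winutils.exe`. The following is a minimal Python sketch of that lookup, useful for checking a machine before starting Spark. The function name `expected_winutils_path` is hypothetical, and the sketch only inspects the environment variable, not the Java system property.

```python
import os
from pathlib import PureWindowsPath
from typing import Mapping, Optional


def expected_winutils_path(env: Mapping[str, str]) -> Optional[str]:
    """Return the path where Hadoop would look for winutils.exe, or None.

    Only the HADOOP_HOME environment variable is inspected here; the
    hadoop.home.dir Java system property is outside the scope of this sketch.
    """
    home = env.get("HADOOP_HOME", "").strip().strip('"')
    if not home:
        # An unset home is what shows up as "null" in the error message.
        return None
    return str(PureWindowsPath(home) / "bin" / "winutils.exe")


if __name__ == "__main__":
    path = expected_winutils_path(os.environ)
    if path is None:
        print("HADOOP_HOME is not set; Hadoop would report null\\bin\\winutils.exe")
    elif os.path.isfile(path):
        print("Found", path)
    else:
        print("HADOOP_HOME is set, but there is no file at", path)
```

If the script reports that `HADOOP_HOME` is not set, or that the file is missing, the distribution built in the rest of this tutorial is what should supply it.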

Solution for Hadoop Error

This error is also related to the Native Hadoop Binaries for Windows OS. So the solution is the same as the above Spark problem, in that you need to build it for your Windows OS from Hadoop's source code.

So just follow this article and at the end of the tutorial you will be able to get rid of these errors by building a Hadoop distribution.

For this article I’m following the official Hadoop building guide at https://svn.apache.org/viewvc/hadoop/common/branches/branch-2/BUILDING.txt

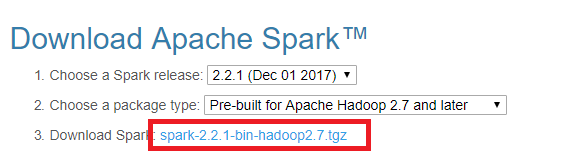

Downloading the Required Files

Download Links

Download Hadoop source from http://www.apache.org/dyn/closer.cgi/hadoop/common/hadoop-2.7.2/hadoop-2.7.2-src.tar.gz

Download Microsoft .NET Framework 4 (Standalone Installer) from https://www.microsoft.com/en-in/download/details.aspx?id=17718

Download Windows SDK 7 Installer from https://www.microsoft.com/en-in/download/details.aspx?id=8279, or you can also use offline installer ISO from https://www.microsoft.com/en-in/download/details.aspx?id=8442. You will find 3 different ISOs to download:

GRMSDK_EN_DVD.iso (x86)

GRMSDKX_EN_DVD.iso (AMD64)

GRMSDKIAI_EN_DVD.iso (Itanium)

Please choose based on your OS type.

Download JDK according to your OS & CPU architecture from http://www.oracle.com/technetwork/java/javase/downloads/index.html

Download and install 7-zip from http://www.7-zip.org/download.html

Download and extract Maven 3.0 or later from https://maven.apache.org/download.cgi

Download Protocol Buffers 2.5.0 from https://github.com/google/protobuf/releases/download/v2.5.0/protoc-2.5.0-win32.zip

Download CMake 3.5.0 or higher from https://cmake.org/download/

Download Cygwin installer from https://cygwin.com/

NOTE: We can use Windows 7 or later for building Hadoop. In my case I have used Windows Server 2008 R2.

NOTE: I used a freshly installed OS and removed all .NET Framework and C++ redistributables from the machine, because they are installed along with Windows SDK 7.1. We are also going to install .NET Framework 4 in this tutorial. If you have any Visual Studio version installed on your machine, it is likely to cause issues in the build process because of version mismatches in some .NET components, and in some cases it will prevent you from installing Windows SDK 7.1.

Installation

A. JDK

1. Start JDK installation, click next, leave installation path as default, and proceed.

2. Again, leave the installation path as default for JRE if it asks, click next to install, and then click close to finish the installation.

3. If you didn't change the path during installation, your Java installation path will be something like 'C:\Program Files\Java\jdk1.8.0_65'.

4. Now right click on My Computer and select Properties, then click on Advanced or go to Control Panel > System > Advanced System Settings.

5. Click the Environment Variables button.

6. Hit the New button in the System Variables section then type JAVA_HOME in the Variable name field and give your JDK installation path in the Variable value field.

a. If the path contains spaces, use the shortened path name, for example “C:\Progra~1\Java\jdk1.8.0_74” for Windows 64-bit systems

i. Progra~1 for 'Program Files'

ii. Progra~2 for 'Program Files (x86)'

7. It should look like:

8. Now click OK.

9. Search for the Path variable in the “System Variable” section in the “Environment Variables” dialogue box you just opened.

10. Edit the path and type “;%JAVA_HOME%\bin” at the end of the text already written there just like the image below:

11. To confirm your Java installation, open cmd and type “java -version”. You should see the version of Java you just installed.

If your command prompt looks like the image above, you are good to go. Otherwise, recheck that the JDK you installed matches your OS architecture (x86 or x64) and that the environment variable paths are correct.
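The shortened names from step 6 can be applied mechanically. The sketch below rewrites a `Program Files` folder using the two aliases listed above. The mapping is taken from this tutorial only: Windows assigns short names itself and they may differ, or be disabled, on a given machine, so treat the result as a guess and confirm it with `dir /x`. The function name `shorten_for_java_home` is hypothetical.

```python
from pathlib import PureWindowsPath

# Aliases as listed in this tutorial; Windows may assign different short names.
SHORT_NAMES = {
    "program files": "Progra~1",
    "program files (x86)": "Progra~2",
}


def shorten_for_java_home(path: str) -> str:
    """Replace a space-containing Program Files folder with its short alias."""
    parts = PureWindowsPath(path).parts
    shortened = [SHORT_NAMES.get(part.lower(), part) for part in parts]
    return str(PureWindowsPath(*shortened))


if __name__ == "__main__":
    # Example input is the default JDK location mentioned in step 3.
    print(shorten_for_java_home(r"C:\Program Files\Java\jdk1.8.0_65"))
```

Paths that do not pass through either folder are returned unchanged, so the function is safe to apply to any candidate JAVA_HOME value.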

B. .NET Framework 4

1. Double-click on the downloaded offline installer of Microsoft .NET Framework 4. (i.e. dotNetFx40_Full_x86_x64.exe).

2. When prompted, accept the license terms and click the install button.

3. At the end, just click finish and it’s done.

C. Windows SDK 7

1. Open the Programs and Features (Uninstall a program) window from My Computer or Control Panel.

2. Uninstall all Microsoft Visual C++ Redistributables that were installed with the OS, because they may be newer than the version Windows SDK 7.1 requires and will cause errors during SDK installation.

3. Now open your downloaded Windows 7 SDK ISO file using 7zip and extract it to the C:\WinSDK7 folder. You can also mount it as a virtual CD drive if you have that feature.

4. You will have the following files in your SDK folder:

5. Now open your Windows SDK folder and run setup.

6. Follow the instructions and install the SDK.


7. At the end when you get a window saying Set Help Library Manager, click cancel.

D. Maven

1. Now extract the downloaded Maven zip file to the C drive.

2. For this tutorial we are using Maven 3.3.3.

3. Now open the Environment Variables panel just like we did during JDK installation to set M2_HOME.

4. Create a new entry in System Variables and set the name as M2_HOME and value as your Maven path before the bin folder, for example: C:\Maven-3.3.3. Just like the image below:

5. Now click OK.

6. Search for the Path variable in the “System Variable” section, click the edit button, and type “;%M2_HOME%\bin” at the end of the text already written there just like the image below:

7. To confirm your Maven installation, open cmd and type “mvn -v”. You should see the version of Maven you just installed.

If your command prompt looks like the image above, you are done with Maven.

E. Protocol Buffer 2.5.0

1. Extract the Protocol Buffer zip to C:\protoc-2.5.0-win32.

2. Now add this folder to the “Path” variable in the System Variables section of the Environment Variables dialog, just like the image below:

3. To check if the protocol buffer installation is working fine just type command “protoc --version”

Your command prompt should look like this.
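This tutorial uses Protocol Buffers 2.5.0 specifically, so it is worth confirming the exact version rather than just that the command runs. `protoc --version` prints a line of the form `libprotoc 2.5.0`; the sketch below parses that line. The function names and the `REQUIRED_PROTOC` constant are hypothetical helpers for illustration.

```python
import re
import shutil
import subprocess
from typing import Optional

REQUIRED_PROTOC = "2.5.0"  # version used throughout this tutorial


def parse_protoc_version(output: str) -> Optional[str]:
    """Extract the version number from text such as 'libprotoc 2.5.0'."""
    match = re.search(r"libprotoc\s+(\d+(?:\.\d+)*)", output)
    return match.group(1) if match else None


def installed_protoc_version() -> Optional[str]:
    """Run protoc from PATH and return its version, or None if unavailable."""
    exe = shutil.which("protoc")
    if exe is None:
        return None
    result = subprocess.run([exe, "--version"], capture_output=True, text=True)
    return parse_protoc_version(result.stdout)


if __name__ == "__main__":
    version = installed_protoc_version()
    if version == REQUIRED_PROTOC:
        print("protoc", version, "found")
    else:
        print("Expected protoc", REQUIRED_PROTOC, "but found", version)
```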

F. Cygwin

1. Download Cygwin according to your OS architecture

a. 64 bit (setup-x86_64.exe)

b. 32 bit (setup-x86.exe)

2. Start Cygwin installation and choose 'Install from Internet' when it asks you to choose a download source, then click next.


3. Follow the instructions further and choose any Download site when prompted. If it fails, try any other site from the list and click next:

4. Once the download is finished, it will show you the list of default packages to be installed. According to the Hadoop official build instructions (https://svn.apache.org/viewvc/hadoop/common/branches/branch-2/BUILDING.txt?view=markup) we only need six packages: sh, mkdir, rm, cp, tar, gzip. For the sake of simplicity, just click next and it will download all the default packages.


5. Once the installation is finished, we need to set the Cygwin installation folder to the System Environment Variable 'Path.' To do that, just open the Environment Variables panel.

6. Edit the Path variable in the “System Variable” section, add a semicolon, and then paste the path of the bin folder inside your Cygwin installation directory. Just like the image below:

7. You can verify Cygwin installation just by running command “uname -r” or “uname -a” as shown below:
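Beyond `uname`, it can help to confirm that each of the six tools named in step 4 actually resolves on the Path. The following sketch uses Python's `shutil.which` for that; the function name `missing_tools` and its injectable `which` parameter are hypothetical conveniences, not part of the Hadoop build.

```python
import shutil
from typing import Callable, List, Optional

# The six tools named in the Hadoop build instructions quoted above.
REQUIRED_TOOLS = ["sh", "mkdir", "rm", "cp", "tar", "gzip"]


def missing_tools(
    tools: List[str] = REQUIRED_TOOLS,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> List[str]:
    """Return the tools that cannot be resolved on the current PATH."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("All required Cygwin tools are on PATH")
```

Run it from a newly opened command prompt, since prompts that were already open do not pick up changes to the Path variable.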

G. CMake

1. Run the downloaded CMake installer.

2. On the below screen make sure you choose “Add CMake to the PATH for all users.”


3. Now click Next and follow the instructions to complete the installation.


4. To check that the CMake installation is correct, open a new command prompt and type “cmake --version”. You will see the installed CMake version.

Building Hadoop


1. Open your downloaded Hadoop source code file, i.e. hadoop-2.7.2-src.tar.gz with 7zip.

2. Inside that you will find 'hadoop-2.7.2-src.tar'. Double-click that file.

3. Now you will be able to see the hadoop-2.7.2-src folder. Open that folder and you will be able to see the source code as shown here:

4. Now click Extract and give a short path like C:\hdp and click OK. If you give a long path you may get a runtime error due to Windows' maximum path length limitation.

5. Once the extraction is finished we need to add a new “Platform” System Variable. The values for the platform will be:

a. x64 (for 64-bit OS)

b. Win32 (for 32-bit OS)

Please note that the variable name Platform is case sensitive. So do not change lettercase.

6. To add a Platform in the System variable, just open the Environment variables dialogue box, click on the “New…” button in the System variable section, and fill the Name and Value text boxes as shown below:

7. Before we proceed with the build, have a look at the state of all installed programs on my machine:


8. Also check the structure of Hadoop sources extracted on the C drive:

9. Open a Windows SDK 7.1 Command Prompt window from Start --> All Programs --> Microsoft Windows SDK v7.1, and click on Windows SDK 7.1 Command Prompt.

10. Change the directory to your extracted Hadoop source folder. For this tutorial it is C:\hdp, so type the command cd C:\hdp.

11. Now type command mvn package -Pdist,native-win -DskipTests -Dtar

NOTE: You need a working internet connection as Maven will try to download all required dependencies from online repositories.

12. If everything goes smoothly it will take around 30 minutes. It depends upon your Internet connection and CPU speed.

13. If everything goes well you will see a success message like the below image. Your native Hadoop distribution will be created at C:\hdp\hadoop-dist\target\hadoop-2.7.2


14. Now open C:\hdp\hadoop-dist\target\hadoop-2.7.2. You will find “hadoop-2.7.2.tar.gz”. This Hadoop distribution contains native Windows binaries and can be used on a Windows OS for Hadoop clusters.

15. To run a Hadoop instance you need to change some configuration files such as hadoop-env.cmd, core-site.xml, hdfs-site.xml, slaves, etc. For those changes, please follow this official link to set up and run Hadoop on Windows: https://wiki.apache.org/hadoop/Hadoop2OnWindows.
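Two of the steps above are easy to get wrong before the build even starts: the Platform value from step 5 and the extraction path length from step 4. A rough pre-build check might look like the sketch below. It assumes the classic 260-character Windows path limit and the C:\hdp folder used in this tutorial; the function names are hypothetical, and any architecture other than AMD64 is mapped to Win32 simply because those are the only two values this tutorial lists.

```python
import os
import platform
from typing import Tuple

WINDOWS_MAX_PATH = 260  # classic MAX_PATH limit, assumed to apply to the build


def platform_value(machine: str) -> str:
    """Map an architecture name to the Platform value from step 5."""
    # Anything that is not a known 64-bit x86 name falls back to Win32.
    return "x64" if machine.upper() in {"AMD64", "X86_64"} else "Win32"


def longest_path(root: str) -> Tuple[int, str]:
    """Return the length and text of the longest file path under root."""
    longest = (len(root), root)
    for folder, _dirs, files in os.walk(root):
        for name in files:
            full = os.path.join(folder, name)
            longest = max(longest, (len(full), full))
    return longest


if __name__ == "__main__":
    print("Suggested Platform value:", platform_value(platform.machine()))
    length, path = longest_path(r"C:\hdp")
    print("Longest source path:", length, "characters")
    if length >= WINDOWS_MAX_PATH:
        print("Consider a shorter extraction folder; longest path:", path)
```

The build writes additional files under each module's target folder, so the longest source path is only a lower bound; leaving generous headroom below the limit is the safer choice.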
